-

Iyer, Kishan win back India contracts as Pant's deal upgraded

Iyer, Kishan win back India contracts as Pant's deal upgraded

-

Vance lands in India for tough talks on trade

-

Inside South Africa's wildlife CSI school helping to catch poachers

Inside South Africa's wildlife CSI school helping to catch poachers

-

Nigerian Afrobeat legend Femi Kuti takes a look inward

-

Kim Kardashian: From sex tape to Oval Office via TV and Instagram

Kim Kardashian: From sex tape to Oval Office via TV and Instagram

-

Vance in India for tough talks on trade

-

Thunder crush Grizzlies as Celtics, Cavs and Warriors win

Thunder crush Grizzlies as Celtics, Cavs and Warriors win

-

Vance heads to India for tough talks on trade

-

China slams 'appeasement' of US as nations rush to secure trade deals

China slams 'appeasement' of US as nations rush to secure trade deals

-

'Grandpa robbers' go on trial for Kardashian heist in Paris

-

Swede Lindblad gets first win in just third LPGA start

Swede Lindblad gets first win in just third LPGA start

-

Gold hits record, dollar drops as tariff fears dampen sentiment

-

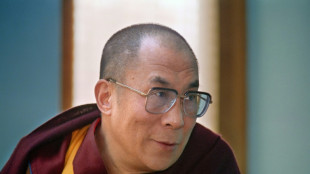

As Dalai Lama approaches 90, Tibetans weigh future

As Dalai Lama approaches 90, Tibetans weigh future

-

US defense chief shared sensitive information in second Signal chat: US media

-

Swede Lingblad gets first win in just third LPGA start

Swede Lingblad gets first win in just third LPGA start

-

South Korea ex-president back in court for criminal trial

-

Thunder crush Grizzlies, Celtics and Cavs open NBA playoffs with wins

Thunder crush Grizzlies, Celtics and Cavs open NBA playoffs with wins

-

Beijing slams 'appeasement' of US in trade deals that hurt China

-

Trump in his own words: 100 days of quotes

Trump in his own words: 100 days of quotes

-

Padres say slugger Arraez 'stable' after scary collision

-

Trump tariffs stunt US toy imports as sellers play for time

Trump tariffs stunt US toy imports as sellers play for time

-

El Salvador offers to swap US deportees with Venezuela

-

Higgo holds on for win after Dahmen's late collapse

Higgo holds on for win after Dahmen's late collapse

-

El Salvador's president proposes prisoner exchange with Venezuela

-

Gilgeous-Alexander, Jokic, Antetokounmpo named NBA MVP finalists

Gilgeous-Alexander, Jokic, Antetokounmpo named NBA MVP finalists

-

Thomas ends long wait with playoff win over Novak

-

Thunder rumble to record win over Grizzlies, Celtics top Magic in NBA playoff openers

Thunder rumble to record win over Grizzlies, Celtics top Magic in NBA playoff openers

-

Linesman hit by projectile as Saint-Etienne edge toward safety

-

Mallia guides Toulouse to Top 14 win over Stade Francais

Mallia guides Toulouse to Top 14 win over Stade Francais

-

Israel cancels visas for French lawmakers

-

Russia and Ukraine trade blame over Easter truce, as Trump predicts 'deal'

Russia and Ukraine trade blame over Easter truce, as Trump predicts 'deal'

-

Valverde stunner saves Real Madrid title hopes against Bilbao

-

Ligue 1 derby interrupted after assistant referee hit by projectile

Ligue 1 derby interrupted after assistant referee hit by projectile

-

Leclerc bags Ferrari first podium of the year

-

Afro-Brazilian carnival celebrates cultural kinship in Lagos

Afro-Brazilian carnival celebrates cultural kinship in Lagos

-

Ligue 1 derby halted after assistant referee hit by projectile

-

Thunder rumble with record win over Memphis in playoff opener

Thunder rumble with record win over Memphis in playoff opener

-

Leverkusen held at Pauli to put Bayern on cusp of title

-

Israel says Gaza medics' killing a 'mistake,' to dismiss commander

Israel says Gaza medics' killing a 'mistake,' to dismiss commander

-

Piastri power rules in Saudi as Max pays the penalty

-

Leaders Inter level with Napoli after falling to late Orsolini stunner at Bologna

Leaders Inter level with Napoli after falling to late Orsolini stunner at Bologna

-

David rediscovers teeth as Chevalier loses some in nervy Lille win

-

Piastri wins Saudi Arabian Grand Prix, Verstappen second

Piastri wins Saudi Arabian Grand Prix, Verstappen second

-

Kohli, Rohit star as Bengaluru and Mumbai win in IPL

-

Guirassy helps Dortmund past Gladbach, putting top-four in sight

Guirassy helps Dortmund past Gladbach, putting top-four in sight

-

Alexander-Arnold lauds 'special' Liverpool moments

-

Pina strikes twice as Barca rout Chelsea in Champions League semi

Pina strikes twice as Barca rout Chelsea in Champions League semi

-

Rohit, Suryakumar on song as Mumbai hammer Chennai in IPL

-

Dortmund beat Gladbach to keep top-four hopes alive

Dortmund beat Gladbach to keep top-four hopes alive

-

Leicester relegated from the Premier League as Liverpool close in on title

Inbred, gibberish or just MAD? Warnings rise about AI models

When academic Jathan Sadowski reached for an analogy last year to describe how AI programs decay, he landed on the term "Habsburg AI".

The Habsburgs were one of Europe's most powerful royal houses, but entire sections of their family line collapsed after centuries of inbreeding.

Recent studies have shown how AI programs underpinning products like ChatGPT go through a similar collapse when they are repeatedly fed their own data.

"I think the term Habsburg AI has aged very well," Sadowski told AFP, saying his coinage had "only become more relevant for how we think about AI systems".

The ultimate concern is that AI-generated content could take over the web, which could in turn render chatbots and image generators useless and throw a trillion-dollar industry into a tailspin.

But other experts argue that the problem is overstated, or can be fixed.

And many companies are enthusiastic about using what they call synthetic data to train AI programs. This artificially generated data is used to augment or replace real-world data. It is cheaper than human-created content and more predictable.

"The open question for researchers and companies building AI systems is: how much synthetic data is too much," said Sadowski, lecturer in emerging technologies at Australia's Monash University.

- 'Mad cow disease' -

Training AI programs, known in the industry as large language models (LLMs), involves scraping vast quantities of text or images from the internet.

This information is broken into trillions of tiny machine-readable chunks, known as tokens.
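To make the idea of tokens concrete, here is a deliberately simplified sketch. It assumes a toy splitter that cuts text into words and punctuation marks and gives each distinct chunk an integer ID. Production models such as ChatGPT use learned subword tokenizers instead, so the function names and splitting rule here are illustrative only.

```python
import re

def tokenize(text: str) -> list[str]:
    """Toy tokenizer (an assumption): lowercase, then split into words and punctuation."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

text = "Cows were fed to other cows."
tokens = tokenize(text)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]  # the machine-readable form of the sentence
print(tokens)
print(ids)
```

The repeated word "cows" maps to the same ID both times, which is what lets a model treat it as the same unit wherever it appears.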

When asked a question, a program like ChatGPT selects and assembles tokens in a way that its training data tells it is the most likely sequence to fit with the query.
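The selection step can be illustrated, very loosely, with a toy model that counts which word follows which in its training text and always picks the most frequent follower. This bigram counter is an assumption made for illustration: real LLMs use neural networks that weigh long stretches of context, not simple pair counts.

```python
from collections import Counter, defaultdict

def train_bigrams(tokens: list[str]) -> dict[str, Counter]:
    """Count how often each token is immediately followed by each other token."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def most_likely_next(counts: dict[str, Counter], token: str):
    """Return the follower seen most often after `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts: dict[str, Counter], start: str, steps: int) -> list[str]:
    """Greedily extend `start` by appending the most likely next token at each step."""
    out = [start]
    for _ in range(steps):
        nxt = most_likely_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

corpus = "the cat sat on the mat . the cat sat on the rug .".split()
model = train_bigrams(corpus)
print(generate(model, "the", 5))
```

Always taking the single most likely token is the simplest possible rule, and it also shows how such a model can only echo patterns that were in its training data.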

But even the best AI tools generate falsehoods and nonsense, and critics have long expressed concern about what would happen if a model was fed on its own outputs.

In late July, a paper in the journal Nature titled "AI models collapse when trained on recursively generated data" proved a lightning rod for discussion.

The authors described how models quickly discarded rarer elements in their original dataset and, as Nature reported, outputs degenerated into "gibberish".
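A toy simulation, assumed here for illustration and not taken from the Nature paper, shows why rarer elements tend to vanish. Each "generation" draws a finite sample from the previous model's word frequencies and refits the frequencies to that sample. A word that happens to receive zero samples gets probability zero and can never come back, so the set of surviving words can only shrink. The word list, sample size and seed are hypothetical.

```python
import random
from collections import Counter

def next_generation(freqs: dict[str, float], sample_size: int,
                    rng: random.Random) -> dict[str, float]:
    """Sample from the current model, then refit the model to that sample only."""
    words = list(freqs)
    weights = [freqs[w] for w in words]
    sample = rng.choices(words, weights=weights, k=sample_size)
    counts = Counter(sample)  # words drawn zero times are simply absent
    return {w: c / sample_size for w, c in counts.items()}

def simulate(freqs: dict[str, float], generations: int, sample_size: int, seed: int = 0):
    """Train each generation on the previous one's output; track how many words survive."""
    rng = random.Random(seed)
    support_sizes = [len(freqs)]
    for _ in range(generations):
        freqs = next_generation(freqs, sample_size, rng)
        support_sizes.append(len(freqs))
    return freqs, support_sizes

original = {"common": 0.90, "uncommon": 0.08, "rare": 0.015, "very_rare": 0.005}
final, support_sizes = simulate(original, generations=20, sample_size=50)
print(support_sizes)
print(final)
```

With a sample this small, the rare words tend to drop out within a few generations; the exact run depends on the random seed.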

A week later, researchers from Rice and Stanford universities published a paper titled "Self-consuming generative models go MAD" that reached a similar conclusion.

They tested image-generating AI programs and showed that outputs became more generic and riddled with undesirable elements as more AI-generated data was fed back into the models' training.

They labelled model collapse "Model Autophagy Disorder" (MAD) and compared it to mad cow disease, a fatal illness caused by feeding the remnants of dead cows to other cows.

- 'Doomsday scenario' -

These researchers worry that AI-generated text, images and video are clearing the web of usable human-made data.

"One doomsday scenario is that if left uncontrolled for many generations, MAD could poison the data quality and diversity of the entire internet," one of the Rice University authors, Richard Baraniuk, said in a statement.

However, industry figures are unfazed.

Anthropic and Hugging Face, two leaders in the field who pride themselves on taking an ethical approach to the technology, both told AFP they used AI-generated data to fine-tune or filter their datasets.

Anton Lozhkov, machine learning engineer at Hugging Face, said the Nature paper gave an interesting theoretical perspective but its disaster scenario was not realistic.

"Training on multiple rounds of synthetic data is simply not done in reality," he said.

However, he said researchers were just as frustrated as everyone else with the state of the internet.

"A large part of the internet is trash," he said, adding that Hugging Face already made huge efforts to clean data -- sometimes jettisoning as much as 90 percent.
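One common way to clean web data is to apply simple rules to every document. The sketch below is a hypothetical example, not Hugging Face's actual pipeline: it drops exact duplicates (ignoring case and spacing), very short texts, and texts made up mostly of symbols rather than letters. The thresholds `min_words` and `min_alpha_ratio` are assumed values.

```python
def keep(doc: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Keep a document only if it is long enough and mostly made of letters."""
    if len(doc.split()) < min_words:
        return False
    alpha = sum(ch.isalpha() for ch in doc)
    non_space = sum(not ch.isspace() for ch in doc)
    return non_space > 0 and alpha / non_space >= min_alpha_ratio

def clean(docs: list[str]) -> list[str]:
    """Drop exact duplicates (case- and spacing-insensitive), then apply the quality rule."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        key = " ".join(doc.lower().split())
        if key in seen:
            continue
        seen.add(key)
        if keep(doc):
            kept.append(doc)
    return kept

docs = [
    "The Habsburgs were one of Europe's most powerful royal houses.",
    "the habsburgs were one of europe's most powerful royal houses.",  # duplicate
    "Click here!!!",                                                   # too short
    "$$$ 100% FREE $$$ !!! ### @@@ >>> <<<",                           # mostly symbols
    "Buy now",                                                         # too short
]
kept = clean(docs)
print(f"kept {len(kept)} of {len(docs)}, discarded {1 - len(kept) / len(docs):.0%}")
```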

He hoped that web users would help clear up the internet by simply not engaging with generated content.

"I strongly believe that humans will see the effects and catch generated data way before models will," he said.

P.Vogel--VB